openEuler 20.03 (LTS-SP2) aarch64 cephadm 部署ceph18.2.0【4】 添加mon节点 manifest unknown(bug?)

接上篇当前状态。

接上篇

openEuler 20.03 (LTS-SP2) aarch64 cephadm 部署ceph18.2.0【1】离线部署 准备基础环境-CSDN博客

openEuler 20.03 (LTS-SP2) aarch64 cephadm 部署ceph18.2.0【3】bootstrap 解决firewalld防火墙导致的故障-CSDN博客

当前状态

ceph orch host ls

重新bootstrap

由于之前10-2.1.176主机名未设置,执行了bootstrap。为了保持主机名一致性,删除所有数据,重新bootstrap(应注意第一次bootstrap前确认采用hostnamectl设置了正确的主机名)

删除集群

podman ps | grep -v CONTAINER | awk '{print $1}' | xargs podman rm -f

rm /etc/ceph/ -rf

rm /var/lib/ceph -rf

rm /var/log/ceph/ -rf

systemctl restart podman

搭建registry私服

podman load -i podman-images/registry-2.tar

# 删除旧数据

rm -rf /var/lib/registry

mkdir -p /var/lib/registry

podman run --privileged -d --name registry -p 5000:5000 -v /var/lib/registry:/var/lib/registry --restart=always registry:2推送镜像

podman push 10.2.1.176:5000/quay.io/ceph/ceph:v18.2.0

podman push 10.2.1.176:5000/quay.io/prometheus/prometheus:v2.43.0

podman push 10.2.1.176:5000/docker.io/grafana/loki:2.4.0

podman push 10.2.1.176:5000/docker.io/grafana/promtail:2.4.0

podman push 10.2.1.176:5000/quay.io/prometheus/node-exporter:v1.5.0

podman push 10.2.1.176:5000/quay.io/prometheus/alertmanager:v0.25.0

podman push 10.2.1.176:5000/quay.io/ceph/ceph-grafana:9.4.7

podman push 10.2.1.176:5000/quay.io/ceph/haproxy:2.3

podman push 10.2.1.176:5000/quay.io/ceph/keepalived:2.2.4

podman push 10.2.1.176:5000/docker.io/maxwo/snmp-notifier:v1.2.1

podman push 10.2.1.176:5000/quay.io/omrizeneva/elasticsearch:6.8.23

podman push 10.2.1.176:5000/quay.io/jaegertracing/jaeger-collector:1.29

podman push 10.2.1.176:5000/quay.io/jaegertracing/jaeger-agent:1.29

podman push 10.2.1.176:5000/quay.io/jaegertracing/jaeger-query:1.29bootstrap

[root@ceph-176 ~]#

[root@ceph-176 ~]# cephadm --image 10.2.1.176:5000/quay.io/ceph/ceph:v18.2.0 bootstrap --registry-url=10.2.1.176:5000 --registry-username=x --registry-password=x --mon-ip 10.2.1.176 --log-to-file

Verifying podman|docker is present...

Verifying lvm2 is present...

Verifying time synchronization is in place...

Unit ntpd.service is enabled and running

Repeating the final host check...

podman (/usr/bin/podman) version 3.4.4 is present

systemctl is present

lvcreate is present

Unit ntpd.service is enabled and running

Host looks OK

Cluster fsid: 75dc1df2-97e8-11ee-8a99-faa4b605ed00

Verifying IP 10.2.1.176 port 3300 ...

Verifying IP 10.2.1.176 port 6789 ...

Mon IP `10.2.1.176` is in CIDR network `10.2.1.0/24`

Mon IP `10.2.1.176` is in CIDR network `10.2.1.0/24`

Internal network (--cluster-network) has not been provided, OSD replication will default to the public_network

Logging into custom registry.

Pulling container image 10.2.1.176:5000/quay.io/ceph/ceph:v18.2.0...

Ceph version: ceph version 18.2.0 (5dd24139a1eada541a3bc16b6941c5dde975e26d) reef (stable)

Extracting ceph user uid/gid from container image...

Creating initial keys...

Creating initial monmap...

Creating mon...

firewalld ready

Waiting for mon to start...

Waiting for mon...

mon is available

Assimilating anything we can from ceph.conf...

Generating new minimal ceph.conf...

Restarting the monitor...

Setting mon public_network to 10.2.1.0/24

Wrote config to /etc/ceph/ceph.conf

Wrote keyring to /etc/ceph/ceph.client.admin.keyring

Creating mgr...

Verifying port 9283 ...

Verifying port 8765 ...

Verifying port 8443 ...

firewalld ready

firewalld ready

Waiting for mgr to start...

Waiting for mgr...

mgr not available, waiting (1/15)...

mgr not available, waiting (2/15)...

mgr not available, waiting (3/15)...

mgr not available, waiting (4/15)...

mgr is available

Enabling cephadm module...

Waiting for the mgr to restart...

Waiting for mgr epoch 5...

mgr epoch 5 is available

Setting orchestrator backend to cephadm...

Generating ssh key...

Wrote public SSH key to /etc/ceph/ceph.pub

Adding key to root@localhost authorized_keys...

Adding host ceph-176...

Deploying mon service with default placement...

Deploying mgr service with default placement...

Deploying crash service with default placement...

Deploying ceph-exporter service with default placement...

Deploying prometheus service with default placement...

Deploying grafana service with default placement...

Deploying node-exporter service with default placement...

Deploying alertmanager service with default placement...

Enabling the dashboard module...

Waiting for the mgr to restart...

Waiting for mgr epoch 9...

mgr epoch 9 is available

Generating a dashboard self-signed certificate...

Creating initial admin user...

Fetching dashboard port number...

firewalld ready

Ceph Dashboard is now available at:

URL: https://ceph-176:8443/

User: admin

Password: wont7z1rg0

Enabling client.admin keyring and conf on hosts with "admin" label

Saving cluster configuration to /var/lib/ceph/75dc1df2-97e8-11ee-8a99-faa4b605ed00/config directory

Enabling autotune for osd_memory_target

You can access the Ceph CLI as following in case of multi-cluster or non-default config:

sudo /usr/local/sbin/cephadm shell --fsid 75dc1df2-97e8-11ee-8a99-faa4b605ed00 -c /etc/ceph/ceph.conf -k /etc/ceph/ceph.client.admin.keyring

Or, if you are only running a single cluster on this host:

sudo /usr/local/sbin/cephadm shell

Please consider enabling telemetry to help improve Ceph:

ceph telemetry on

For more information see:

https://docs.ceph.com/en/latest/mgr/telemetry/

Bootstrap complete.

折腾这么猛,就为改个名字。。。

设置容器镜像均采用内网服务器地址

ceph config set mgr mgr/cephadm/container_image_alertmanager 10.2.1.176:5000/quay.io/prometheus/alertmanager:v0.25.0

ceph config set mgr mgr/cephadm/container_image_base 10.2.1.176:5000/quay.io/ceph/ceph:v18.2.0

ceph config set mgr mgr/cephadm/container_image_elasticsearch 10.2.1.176:5000/quay.io/omrizeneva/elasticsearch:6.8.23

ceph config set mgr mgr/cephadm/container_image_grafana 10.2.1.176:5000/quay.io/ceph/ceph-grafana:9.4.7

ceph config set mgr mgr/cephadm/container_image_haproxy 10.2.1.176:5000/quay.io/ceph/haproxy:2.3

ceph config set mgr mgr/cephadm/container_image_jaeger_agent 10.2.1.176:5000/quay.io/jaegertracing/jaeger-agent:1.29

ceph config set mgr mgr/cephadm/container_image_jaeger_collector 10.2.1.176:5000/quay.io/jaegertracing/jaeger-collector:1.29

ceph config set mgr mgr/cephadm/container_image_jaeger_query 10.2.1.176:5000/quay.io/jaegertracing/jaeger-query:1.29

ceph config set mgr mgr/cephadm/container_image_keepalived 10.2.1.176:5000/quay.io/ceph/keepalived:2.2.4

ceph config set mgr mgr/cephadm/container_image_loki 10.2.1.176:5000/docker.io/grafana/loki:2.4.0

ceph config set mgr mgr/cephadm/container_image_node_exporter 10.2.1.176:5000/quay.io/prometheus/node-exporter:v1.5.0

ceph config set mgr mgr/cephadm/container_image_prometheus 10.2.1.176:5000/quay.io/prometheus/prometheus:v2.43.0

ceph config set mgr mgr/cephadm/container_image_promtail 10.2.1.176:5000/docker.io/grafana/promtail:2.4.0ceph orch redeploy prometheus

ceph orch redeploy grafana

ceph orch redeploy alertmanager

ceph orch redeploy node-exporter似乎这个时候不需要重新部署

[ceph: root@ceph-176 /]# ceph orch redeploy prometheusError EINVAL: No daemons exist under service name "prometheus". View currently running services using "ceph orch ls"

[ceph: root@ceph-176 /]# ceph orch redeploy grafana

Error EINVAL: No daemons exist under service name "grafana". View currently running services using "ceph orch ls"

[ceph: root@ceph-176 /]# ceph orch redeploy alertmanager

Error EINVAL: No daemons exist under service name "alertmanager". View currently running services using "ceph orch ls"

[ceph: root@ceph-176 /]# ceph orch redeploy node-exporter

Error EINVAL: No daemons exist under service name "node-exporter". View currently running services using "ceph orch ls"

启动非常慢!

添加host

查看当前情况

[ceph: root@ceph-176 /]# ceph orch host ls

HOST ADDR LABELS STATUS

ceph-176 10.2.1.176 _admin

1 hosts in cluster

配置免密登录容器内)

ceph cephadm get-pub-key > ~/ceph.pub

ssh-copy-id -f -i ~/ceph.pub root@ceph-191

ssh-copy-id -f -i ~/ceph.pub root@ceph-219添加host

ceph orch host add ceph-191 10.2.1.191 --labels _main

ceph orch host add ceph-219 10.2.1.219 --labels _main

ceph orch host ls

添加mon

重启registry!(非常重要)

目测测试看,registry启动后,导入镜像,需要重新容器,否则防火墙规则不生效,导致其他节点podman login报错!

podman restart registry

程序直接卡主!重启时报错

故障:再次添加mon(unknown: manifest unknown)

ceph orch daemon add mon ceph-191:10.2.1.191

查看registry中存储的ceph sha256

对比镜像信息,发现对不上(不明白)

podman inspect 10.2.1.176:5000/quay.io/ceph/ceph:v18.2.0

设置container_image(无效!)

尝试修改 container_image参数,不适用sha256,使用镜像标签

ceph config set global container_image 10.2.1.176:5000/quay.io/ceph/ceph:v18.2.0设置无效!

只能研究为什么push到私有仓库sha256发生变化了

curl 10.2.1.176:5000/v2/quay.io/ceph/ceph/manifests/v18.2.0 2>&1 | grep sha256

文心一言的回答(似乎在之前x86_64上并没有出现此问题)

搭建nfs,共享镜像过来,手动导入

服务端nfs

yum install nfs-utils配置/etc/exports(由于在/root目录下,必须配置no_root_squash,否则客户端无权限访问,挂载失败)

/root/podman-images 10.2.1.0/24(rw,sync,no_root_squash,no_subtree_check)

重启nfs

systemctl restart nfs客户端191挂载

mkdir /mnt/nfsmount

mount.nfs 10.2.1.176:/root/podman-images /mnt/nfsmount/导入镜像,手动tag

[root@ceph-191 nfsmount]# podman rmi 10.2.1.176:5000/quay.io/ceph/ceph:v18.2.0

Untagged: 10.2.1.176:5000/quay.io/ceph/ceph:v18.2.0

Deleted: efeefa2a9c1238e831b9ee459aaca5772502a17bc0e9a13b653d4c56cf38ac13

[root@ceph-191 nfsmount]# podman load -i ceph-v18.2.0.tar

Getting image source signatures

Copying blob 687806ababb3 done

Copying blob 148057ff0bd2 done

Copying config efeefa2a9c done

Writing manifest to image destination

Storing signatures

Loaded image(s): quay.io/ceph/ceph:v18.2.0

[root@ceph-191 nfsmount]# docker tag quay.io/ceph/ceph:v18.2.0 10.2.1.176:5000/quay.io/ceph/ceph:v18.2.0

测试sha256(失败!)

[root@ceph-191 nfsmount]# podman run --rm -it 10.2.1.176:5000/quay.io/ceph/ceph@sha256:7919cf002430693fe20a83cc501a3cf5ec3ffe3cbb0decdf077e1955a1ceb3b4 /bin/true

Trying to pull 10.2.1.176:5000/quay.io/ceph/ceph@sha256:7919cf002430693fe20a83cc501a3cf5ec3ffe3cbb0decdf077e1955a1ceb3b4...

Error: initializing source docker://10.2.1.176:5000/quay.io/ceph/ceph@sha256:7919cf002430693fe20a83cc501a3cf5ec3ffe3cbb0decdf077e1955a1ceb3b4: reading manifest sha256:7919cf002430693fe20a83cc501a3cf5ec3ffe3cbb0decdf077e1955a1ceb3b4 in 10.2.1.176:5000/quay.io/ceph/ceph: manifest unknown: manifest unknown

再次设置container_image(--force成功!)

ceph config set global container_image 10.2.1.176:5000/quay.io/ceph/ceph:v18.2.0 --force

191 mon 自动恢复,一段时间后,该值又自动还原!(分析过程记录)

应该是 10.2.1.176:5000/quay.io/ceph/ceph:v18.2.0 待续。。。。。!!!!

[ceph: root@ceph-176 /]# ceph orch daemon add mon ceph-191:10.2.1.191

卡主了

。。。

神奇的事,191故障,219节点居然能运行,sha256值不对的理解不正确!

一个晚上过去了,container_image又被重置了

219测试页不能pull sha256指定的镜像

猜测:

10.2.1.176:5000/quay.io/ceph/ceph@sha256:7919cf002430693fe20a83cc501a3cf5ec3ffe3cbb0decdf077e1955a1ceb3b4

应该是基于本地的

10.2.1.176:5000/quay.io/ceph/ceph:v18.2.0

镜像,计算来的。

191 podman images

219 podman images

其中10.2.1.176:5000/quay.io/ceph/ceph:v18.2.0 image id 一致。

191 sha256 pull公网资源(成功)

[root@ceph-191 ~]# podman run --rm -it quay.io/ceph/ceph@sha256:7919cf002430693fe20a83cc501a3cf5ec3ffe3cbb0decdf077e1955a1ceb3b4 /bin/true

Trying to pull quay.io/ceph/ceph@sha256:7919cf002430693fe20a83cc501a3cf5ec3ffe3cbb0decdf077e1955a1ceb3b4...

Getting image source signatures

Copying blob 88620ff853b6 done

Copying blob 5487466c90da done

Copying config efeefa2a9c done

Writing manifest to image destination

Storing signatures

成功运行,没有报错!

[root@ceph-191 ~]# podman run --rm -it 10.2.1.176:5000/quay.io/ceph/ceph@sha256:7919cf002430693fe20a83cc501a3cf5ec3ffe3cbb0decdf077e1955a1ceb3b4 /bin/true

[root@ceph-191 ~]#

此时,10.2.1.176:5000上的镜像也不报错了!

但是,podman images没有任何变化!(这是podman aarch64下的bug?)

[root@ceph-191 ~]# podman tag quay.io/ceph/ceph@sha256:7919cf002430693fe20a83cc501a3cf5ec3ffe3cbb0decdf077e1955a1ceb3b4 10.2.1.176:5000/quay.io/ceph/ceph@sha256:7919cf002430693fe20a83cc501a3cf5ec3ffe3cbb0decdf077e1955a1ceb3b4

Error: 10.2.1.176:5000/quay.io/ceph/ceph@sha256:7919cf002430693fe20a83cc501a3cf5ec3ffe3cbb0decdf077e1955a1ceb3b4: tag by digest not supported

[root@ceph-191 ~]# podman images -a

REPOSITORY TAG IMAGE ID CREATED SIZE

quay.io/ceph/ceph v18.2.0 efeefa2a9c12 12 days ago 1.25 GB

10.2.1.176:5000/quay.io/ceph/ceph v18.2.0 efeefa2a9c12 12 days ago 1.25 GB

10.2.1.176:5000/quay.io/ceph/ceph-grafana 9.4.7 dc8d789752ef 8 months ago 676 MB

10.2.1.176:5000/quay.io/prometheus/node-exporter v1.5.0 68cb0c05b3f2 12 months ago 23.2 MB

[root@ceph-191 ~]#

manifest unknown: manifest unknown总结:公网pull一次(podman bug?)

整个集群正常了!

再次添加mon(成功!)

[ceph: root@ceph-176 /]# ceph orch daemon add mon ceph-191:10.2.1.191

Deployed mon.ceph-191 on host 'ceph-191'

[ceph: root@ceph-176 /]# ceph orch daemon add mon ceph-219:10.2.1.219

Deployed mon.ceph-219 on host 'ceph-219'

最终状态

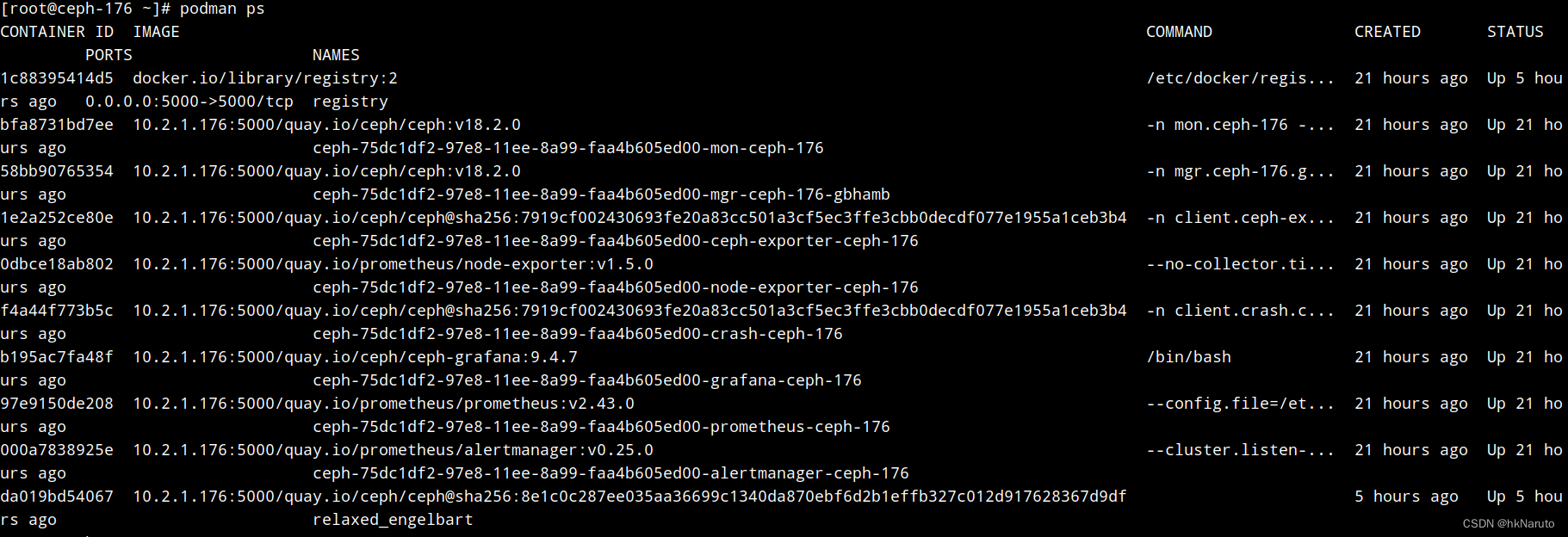

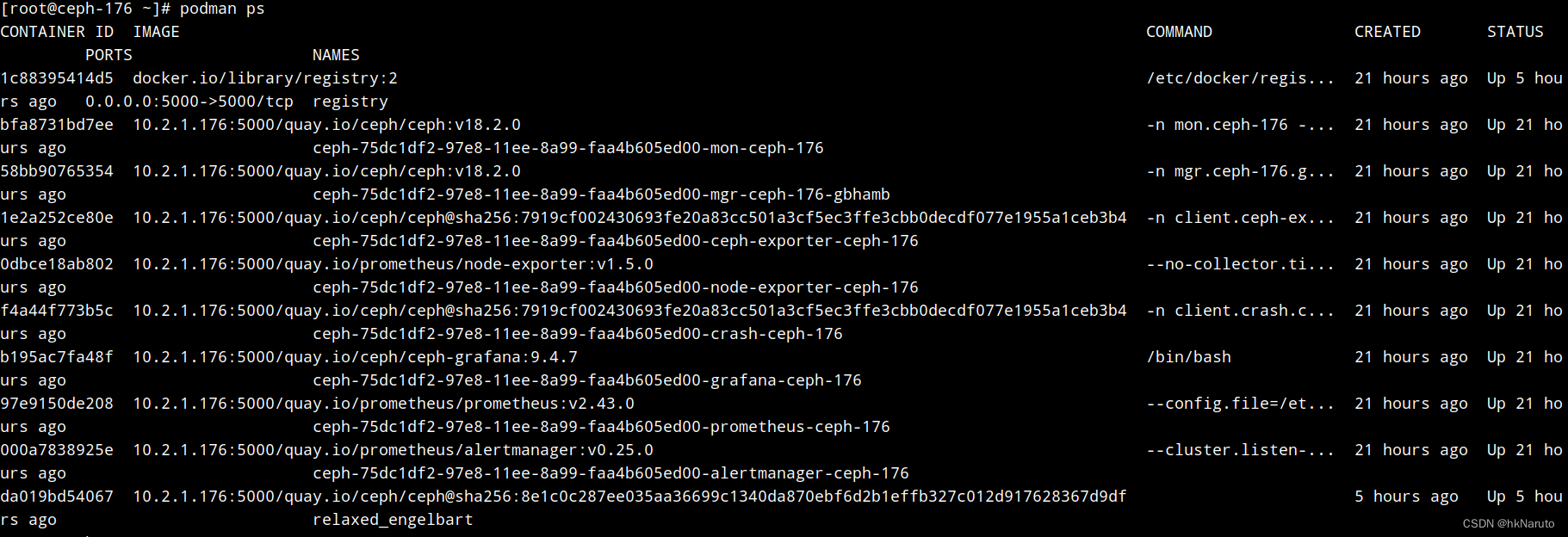

176 podman 容器状态

191 podman容器状态

219容器状态

更多推荐

已为社区贡献3条内容

已为社区贡献3条内容

所有评论(0)